In today's information age, news comes at us from every angle—social media platforms like Instagram, Facebook, and LinkedIn, search engines like Google, traditional news websites, emails, private messages, and ubiquitous advertisements.

That is the reality of our era of information. But how can we trust the avalanche of information we encounter daily?

![The Era of Automated Fake Information | Fake News 3.0]()

What is Fake News 3.0?

We're all familiar with the term "fake news," which refers to false information deliberately published with the intent to deceive. Then came "Fake News 2.0", enabled by advancements in AI technology. This version includes AI-generated falsehoods like deepfake videos, manipulated voice recordings, and fake images.

For example, deepfake-like technologies can make it appear as if former President Trump claims unicorns are real, or as if President Putin says, "The USA is far better than Russia."

This was the reality of our era a few years ago.

Now, let me introduce you to a new reality, a new concept: Fake News 3.0.

This term refers to automated, mass-generated disinformation, a form of deceit even more perilous than its predecessor. Imagine a world where even information from the most reputable sources needs to be scrutinized rigorously.

Not because these sources are deliberately misleading, but because they themselves may be victims of automated disinformation. So even the e-mails you read and the news you watch, read, or listen to can be false.

But how? Why is it really that dangerous?

Let’s see some examples.

Building a Fake News 3.0 Bot: An Experiment

I recently built a bot capable of generating fake news, not with malicious intent, but to explore the capabilities of AI and automation in this context. It started with a simple question: what if?

As the Head of Digital at Tecnovy, one of my primary goals is to optimize our online presence through strategic backlinking and Search Engine Optimization (SEO). Common tools for this endeavor include platforms like HARO (Help a Reporter Out) and Terkel, which connect journalists with expert sources.

Drowning in a flood of HARO inquiries, I faced a bottleneck. The thought occurred to me: could automation be the key? Could I leverage GPT to respond to these requests in a way that wouldn't obviously be machine-generated?

To put this idea to the test, I employed Zapier for automation. While some might argue that a GPT-generated response could easily be spotted, the trick lies in finessing the output to make it indistinguishable from a human-crafted email.

Let’s see.

Human-Like Fake Mails on Autopilot in Just 8 Steps

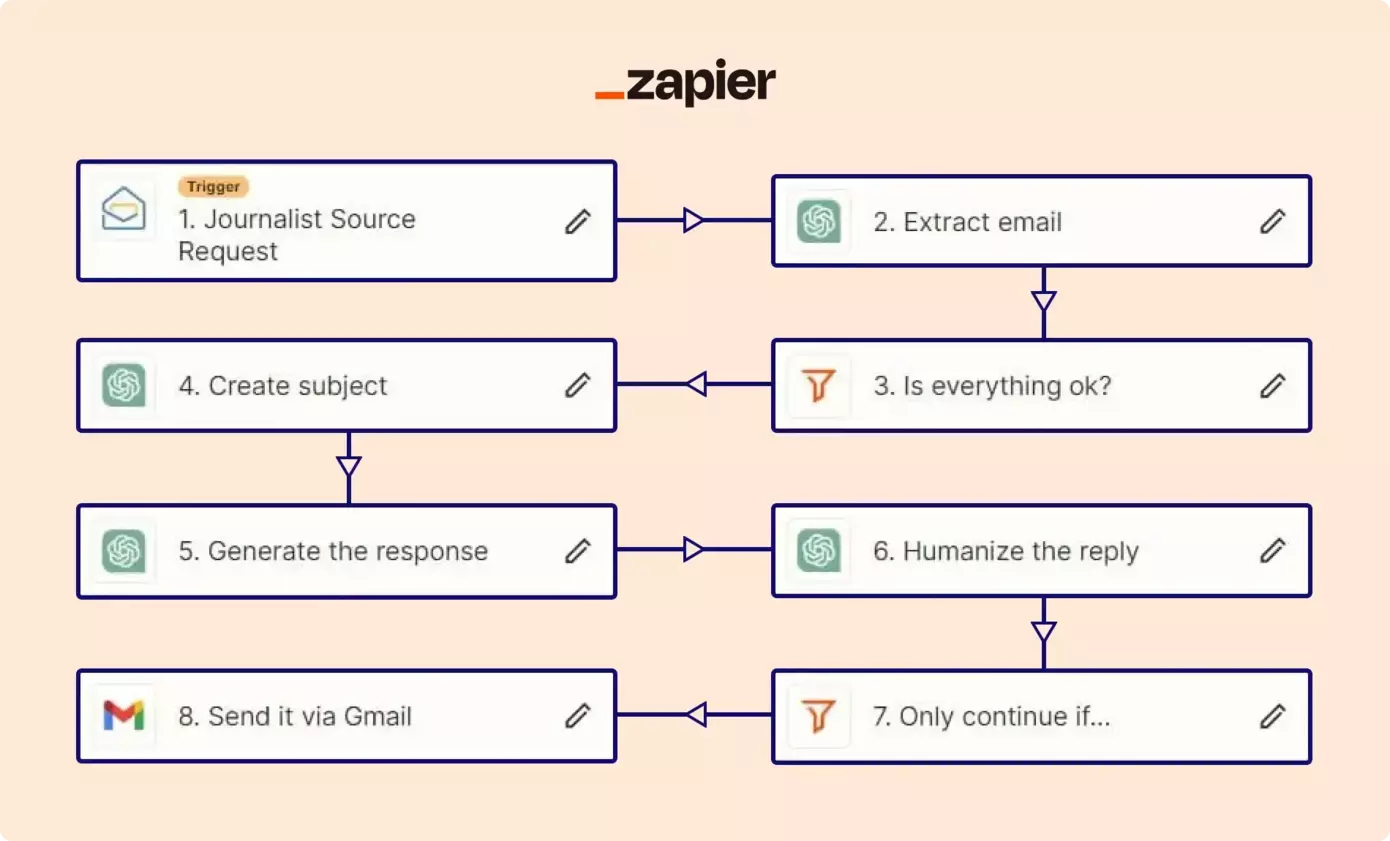

I created this bot in 8 steps. The prompts could be much better, but I didn't put much time into them, and the bot still works quite well. Let's examine each step and then review the results.

- Journalist Source Request

  > The bot's sequence is triggered whenever I get a new e-mail from HARO.

- Extract E-Mail

  > In this step, we extract the journalist's e-mail address from the raw data.

- Is everything ok?

  > Here we check whether the extracted address is valid. If not, the sequence is aborted.

- Create subject

  > We need an e-mail subject line, right? We create it in this step and use it later.

- Generate the response

  > Now we create the e-mail body with a basic prompt. The humanization step comes next.

- Humanize the reply

  > Recipients shouldn't be able to tell at first glance that the mail was written by AI. To make it seem more natural, we add a few human-like patterns to the body: emotions and common grammar mistakes.

- Only continue if…

  > Before sending the e-mail, we check that we got everything right. If not, abort.

- Send it via Gmail

  > Put each piece of data where it belongs, add the signature at the end, and send it. So simple.
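The "Extract E-Mail" and "Is everything ok?" steps can be sketched in a few lines of Python. This is only an illustration of the extract-then-gate idea, not the actual Zapier configuration: the function names `extract_email` and `should_continue` are made up for this sketch, and the pattern is deliberately simple compared to real address syntax.

```python
import re
from typing import Optional

# Deliberately simple pattern (an assumption for this sketch); real e-mail
# address syntax is much broader than this.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")


def extract_email(raw: str) -> Optional[str]:
    """Return the first address-like substring in the raw text, or None."""
    match = EMAIL_RE.search(raw)
    return match.group(0) if match else None


def should_continue(address: Optional[str]) -> bool:
    """Gate step: continue only if the whole value looks like an address."""
    return address is not None and EMAIL_RE.fullmatch(address) is not None


if __name__ == "__main__":
    raw = "Reply to: jane.doe@example.org by Friday"
    address = extract_email(raw)
    print(address, should_continue(address))
```

The gate matters more than the extraction: if nothing usable comes out of the raw data, the sequence stops instead of sending a mail to a broken address.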

The bot processed 200+ mails. What were the results like?

The bot went through more than 200 emails. And guess what? The outcomes were illuminating. Let's put this into perspective: it took me a mere 3 hours to develop this bot. Now imagine the potential if I had invested 300 hours, or if the person behind the bot had less benign intentions.

Example Result #1

In reality, we didn't introduce any AI chatbots last year. There were no improvements in satisfaction scores or response time. We didn't work with any freelancers, didn't save time, and didn't see any boost.

Example Result #2

We don't offer any SaaS yet, nor do we have a tiered pricing strategy. We also didn't see our course registrations skyrocket. I've got to admit, though, Adobe and Salesforce are good examples.

Example Result #3

We have never had an eCommerce client. End of story.

Example Result #4

This could be true! We started using social proof and it increased our conversion rate. However, we don't have any statistics on this. So... this information could actually almost be true. :)

Example Image of My Sent-Box

The email subject lines are too similar to each other. I could have randomized them a bit more, but I didn't bother. Each person should only see one of them anyway.

You were the victim, but a lucky one (so far).

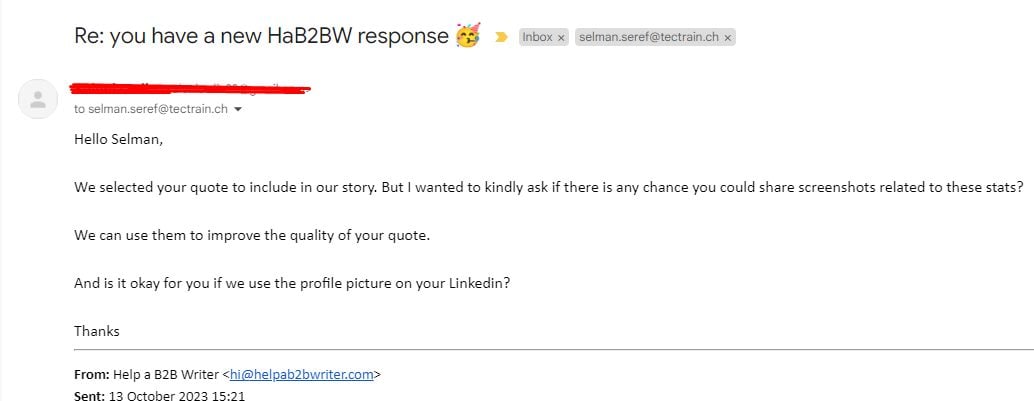

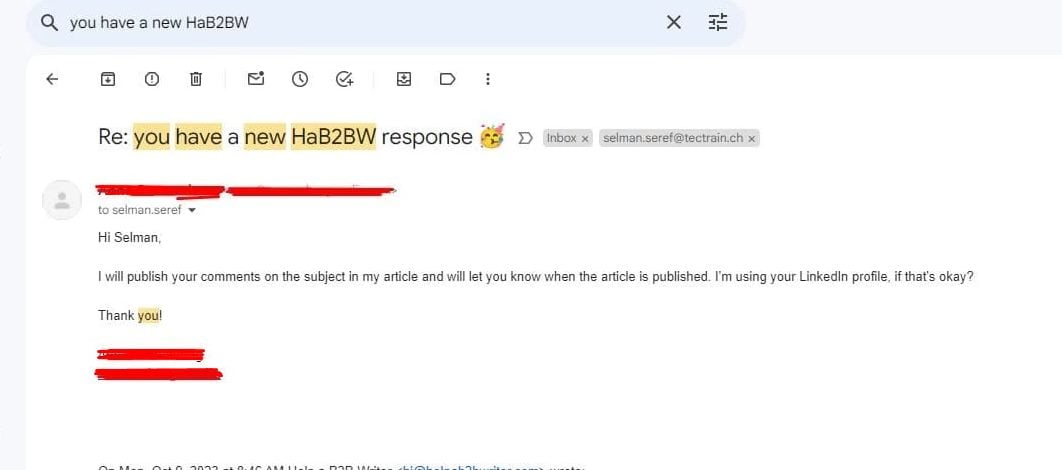

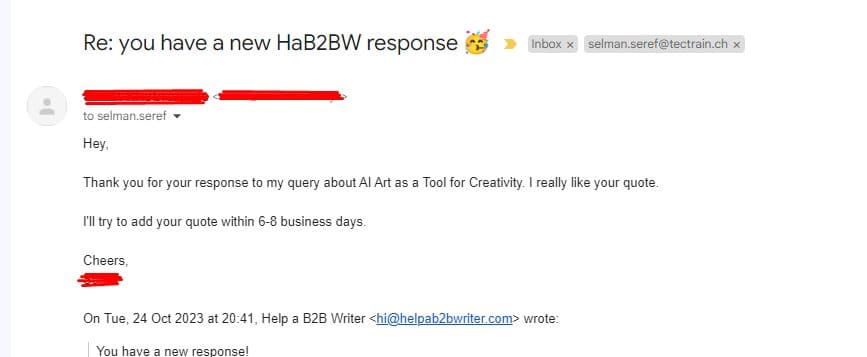

- Out of the 200+ emails I sent, I got around 25 replies (roughly 10%).

- Half of them wanted more info like stats or pictures.

- A couple said my answers were too vague.

- But get this, about 10 were good to go and wanted to put my comments in their stories!
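To put those numbers side by side, here is a small back-of-the-envelope calculation. The counts are approximate in the text ("200+" sent, "around 25" replies, "about 10" approvals), so the sketch evaluates the rates at 200 sent and at an assumed upper bound of 250 sent; the 250 figure is purely illustrative.

```python
def share(part: int, whole: int) -> float:
    """Return part/whole as a percentage, rounded to one decimal place."""
    return round(100 * part / whole, 1)


REPLIES = 25    # "around 25" replies
APPROVED = 10   # "about 10" journalists ready to publish

if __name__ == "__main__":
    # 200 is the stated lower bound; 250 is an assumed upper bound.
    for sent in (200, 250):
        print(
            f"sent={sent}: reply rate {share(REPLIES, sent)}%, "
            f"approval rate {share(APPROVED, sent)}%"
        )
    print(f"approvals among replies: {share(APPROVED, REPLIES)}%")
```

Whichever bound is closer to the truth, roughly 4 to 5 of every 100 mails would have ended up in a published story, and about 40% of the journalists who replied were ready to publish.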

Of course I had to tell them to hold off because it was all for a study. A few people laughed, a few people cried, most people were silent.

I didn't let anyone publish what I sent. I search online every day to see if my name shows up in any articles. If it does, I'll ask them to take it down.

I also did not reveal the full names of the journalists or the platforms on which they write. I don't want to harm anyone's career or reputation. This was just an experiment, nothing more. Then again, that's just me! :)

That was just the appetizer. What is possible?

What you've just seen is merely an appetizer; the full capabilities of Fake News 3.0 are much more concerning. Let me briefly mention a few options below.

![Automated Fake Article Networks]()

1- Automated Fake Article Networks

Oh, before I explain: I love this idea! I might even do it for passive income in the future.

- First, let's get the infrastructure: 100 WordPress sites, each with its own unique domain and language. I'll even use expired domains for instant SEO clout.

- Next, I assemble an automated system—a "strategy bot" that can devise hundreds of complex content strategies daily, storing them neatly in a database.

- Then comes the "content writer bot," tailored to access and utilize the strategy database. No more random generation; everything follows a plan to avoid keyword overlap.

- But wait, there’s more! Introducing the "editor bot," generating relevant images, structuring articles, and handling internal backlinking like a pro.

- Now, I hit the publish button. Not for one article. Not for one site. But for 100 articles on each of 100 domains, on autopilot: up to 10,000 articles a day!

- As the cherry on top, I enable a translation plugin. These 10,000 articles are now available in dozens of languages.
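The scale in the steps above is simple multiplication, which is exactly what makes it worrying. A quick sketch, assuming "dozens of languages" means two dozen (the figure of 24 is an assumption made only for this example):

```python
SITES = 100                      # WordPress sites, one domain each
ARTICLES_PER_SITE_PER_DAY = 100  # articles published per site per day
LANGUAGES = 24                   # assumption: "dozens" taken as two dozen


def daily_articles(sites: int, per_site: int) -> int:
    """Articles published per day across the whole network."""
    return sites * per_site


def daily_pages(sites: int, per_site: int, languages: int) -> int:
    """Pages per day once every article exists in every language."""
    return daily_articles(sites, per_site) * languages


if __name__ == "__main__":
    print(daily_articles(SITES, ARTICLES_PER_SITE_PER_DAY))
    print(daily_pages(SITES, ARTICLES_PER_SITE_PER_DAY, LANGUAGES))
```

Under these assumptions, the 10,000 daily articles turn into 240,000 pages a day once translation is switched on.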

Hold on. What's the intent here? That's the real kicker. Suppose it's a government or a big company. Scale those numbers up and see the monumental impact it could have.

2- Deceptive Emails with Personal Data

Hey, data privacy, GDPR, and so on, right? That's not always how it works. As a regular internet user, I have access to millions of people's private data. Ever heard of the deep web? That data is sold there. I mean official government data, not the data you "implicitly" agreed to share.

We're talking phone numbers, names of your family members, addresses, workplaces, schools—everything. No hyperbole here. Despite regulatory efforts, data breaches happen, and public databases aren't necessarily Fort Knox.

So, who's more likely to pay top dollar for cybersecurity talent—a corporation or a government agency? (Corporation, see the research.) And who stands to make more money: a cybersecurity specialist preventing data breaches or a hacker profiting from them?

Enough controversy, though. Either way, we know that data breaches are real.

Now, imagine this:

- I create an email bot, leveraging the personal data I've obtained.

- I program this bot to craft a narrative based on your life details.

- The resulting story serves as the backdrop for a scam or blackmail scheme, sent straight to your inbox.

Alright, now take it one step further.

![Fake News 3.0 Phone Scams]()

3- Phone Scams using Voice Cloning

We spoke about e-mails. What about phone calls?

- First off, I acquire a wealth of data, just like the method previously discussed.

- I set up a virtual call center, powered by AI, with an arsenal of virtual phone numbers at its disposal.

- This bot initiates calls to your loved ones, striking up innocuous conversations or surveys about their day, school, or work.

- Crucially, the bot records these exchanges, but that's merely the opening act for the real scam.

- Using these recorded snippets, the bot generates voice profiles for your family members, capable of mimicking their speech patterns within a minute or less. Remember, we're not aiming for studio-quality audio; this is a phone call, and modest sound quality would suffice.

- Fast-forward to you soaking up the sun one fine day. Your phone buzzes, you pick up, and on the other end is your distraught daughter, claiming she's been kidnapped and urgently needs bitcoin for her ransom.

Sounds like thriller-movie stuff? Think again. This isn't far-fetched; it's entirely plausible, as easy as making your dinner plans. The example I’ve given is basic; the reality could be far more complex and perilous. Yes, maybe you wouldn’t fall for this scam, but are you sure that your loved ones wouldn’t?

Plot twist: I didn't make this method up. I only explained how it works. It's already out there; see the voice-cloning coverage under Sources & Further Reading.

![Manipulating Journalists | Fake News 3.0]()

4- Manipulating Journalism

Hold on, because this is a pivotal moment in understanding the state of journalism and digital information today. As you already know, I've provided fabricated quotes to journalists through platforms like HARO and Terkel. Let me clarify: I'm neither a public relations expert nor a professional journalist. I am simply an individual fascinated by the frontiers of digital possibilities.

Consider this unnerving prospect:

- Within a mere three days, I could assemble a comprehensive database containing the emails of thousands of journalists.

- Yes, you heard correctly. These email addresses are already available for purchase, if one knows where to look.

- Now, here's the nuanced part. Instead of sending one-size-fits-all pitches, I would meticulously categorize these journalists based on their coverage areas.

- By examining their previously published articles and discerning their key interests, I could craft highly personalized emails.

- These communications wouldn't just be targeted; they would resonate with each journalist's unique career and focus.

- I can make up stories, profiles, case studies, statistics, sources, news… Made-up, fake information on autopilot, steering the narrative in any direction I desire.

But why would somebody do this?

The aim would be to subtly control the narrative. This is not merely about gaining media attention; it's about sculpting public opinion from behind the scenes.

Remember! The point here isn't what I might do with such capabilities; it's to illustrate what could be done on a much larger scale by entities with considerable resources and potentially dubious intentions.

> Technology is morally neutral until we apply it. It's up to us to decide how we use it.
>
> William Gibson

![AI Controlled Social Media Accounts]()

5- AI-Controlled Autonomous Social Media Bots

Just imagine this scenario, similar to the mind-bending examples I've already shared.

- Think of an army of AI-powered bots on platforms like Twitter, Facebook, Instagram, LinkedIn, and TikTok.

- These bots don't just tweet or post generic content; they act and interact as if they were real people.

- They comment on trending topics, discuss the weather, share photos of their 'life' (made with image generators such as DALL-E or Midjourney), and even recommend books.

But here's the jaw-dropping part. The moment the person behind these bots decides it's time to shift the narrative, the bots spring into action. They flood the social feeds with strategically chosen content, effectively changing trending topics and even the overarching narrative. To the casual observer, these accounts appear genuine—replete with a long history of plausible posts and images.

So what does this mean on a grand scale? We're not just talking about group dynamics; we're delving into the mechanics of mass belief manipulation.

> A reliable way to make people believe in falsehoods is frequent repetition, because familiarity is not easily distinguished from truth.
>
> Daniel Kahneman

The staggering implication isn't merely that Fake News 3.0 is automated; it's that this machinery can be scaled to an almost unfathomable extent. The lines between reality and fabrication blur, threatening to disrupt not just individual opinions but the very foundation of societal truth.

Is this too Black-Mirror-ish?

The scenarios above are only the examples I came up with while writing this article, which took me a few hours in total. They may seem Black Mirror-ish and unlikely to ever happen.

But it's more than likely that it's happening right now. I already know dozens of websites that generate articles with GPT on autopilot to get clicks, and hence ad clicks, and earn money. (They do earn money, by the way. Thousands of dollars per month. Look it up.)

Even you can do it. And I am sure somebody else with more resources, time, and dedication can do it far better than the examples I mentioned.

Evolving technology has opened up new horizons for us to explore, but… This reminds me of a quote from 'Sapiens':

> ...the Agricultural Revolution was a trap.
>
> Yuval Noah Harari

Old Gatekeeping Algorithms Can’t Detect New AI Bots

Traditional security mechanisms like email firewalls, server firewalls, and spam detectors were designed for a different era. These systems operate based on known patterns to flag potentially harmful or false information.

However, generative AI has introduced a new layer of complexity by being able to mimic the characteristics of authentic messages and content.

In other words, AI's rapid evolution has effectively outpaced the capabilities of many existing gatekeeping systems. We can program AI to bypass these established patterns, rendering our emails, articles, and social media posts virtually indistinguishable from legitimate human-generated content.
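A toy example makes the gap concrete. The sketch below is a deliberately naive, pattern-based filter; the patterns and both sample messages are invented for illustration, and real spam filters combine many more signals (sender reputation, authentication, statistical models). It only shows why a rule set built on known patterns has nothing to grab onto when a message reads like ordinary human correspondence.

```python
import re

# Hypothetical "known bad" patterns, invented for this sketch.
KNOWN_SPAM_PATTERNS = [
    re.compile(r"\bfree money\b", re.IGNORECASE),
    re.compile(r"\bclick here\b", re.IGNORECASE),
    re.compile(r"!{3,}"),  # runs of three or more exclamation marks
]


def is_flagged(message: str) -> bool:
    """Flag a message if any known pattern appears in it."""
    return any(pattern.search(message) for pattern in KNOWN_SPAM_PATTERNS)


if __name__ == "__main__":
    crude = "FREE MONEY!!! Click here now"
    fluent = "Hi Dana, happy to share how our team handled onboarding last year."
    print(is_flagged(crude))   # matches several patterns
    print(is_flagged(fluent))  # matches none, whoever (or whatever) wrote it
```

The second message passes not because it is true, but because nothing about its form is unusual. A pattern check like this inspects how a message is written and never tests whether its claims are accurate.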

Yes, there are increasingly sophisticated AI-powered detection systems being developed, but it's a never-ending arms race. As detection tools become more advanced, so do the capabilities of those seeking to manipulate or deceive.

This brings us to the core of the issue: technology alone can't be the ultimate safeguard. The most effective line of defense in this constantly evolving landscape is human awareness. New forms of media literacy will have to emerge, teaching us not only how to discern fact from fiction but also how to navigate in a world where the very fabric of 'truth' can be generated by an algorithm.

Beware of Fake News 3.0: So, what should we do?

With the rise of Fake News 3.0, deceptive information is not only omnipresent but also increasingly convincing. It's well-crafted to appear genuine and can easily slip past the old security systems we've come to rely on.

So, how can you protect yourself? One of the most effective strategies is going back to the old-school foundational principles of skepticism and critical thinking. It's more important now than ever to not automatically believe everything you read or hear, no matter how authentic it seems. Take a moment to verify the information, cross-reference sources, and even question the credibility of those sources.

One challenge in doing so is the existence of cognitive biases that can cloud your judgment. For example, confirmation bias makes us more likely to believe information that aligns with our pre-existing beliefs. If you're deeply committed to a particular political stance, you might be more willing to believe news that supports that stance without questioning its authenticity.

Another example is the 'halo effect,' where we're likely to believe information coming from a source we admire or find credible, even if that information is false or misleading.

Being aware of such biases can help you approach news and information more objectively. Actively counteract these biases by seeking information from varied sources and being open to viewpoints that challenge your own.

Ultimately, the best defense against this evolving threat is media literacy and education. Understanding the mechanics of media, from how news is generated to how it's disseminated, can equip you with the tools to discern fact from fiction.

In a world where even established journalists and respected news outlets fall victim to sophisticated fakes, a thorough understanding of media processes becomes our most reliable safeguard.

Media literacy isn't just a nice-to-have skill anymore; it's becoming an essential part of being an informed and responsible citizen.

> The greatest enemy of knowledge is not ignorance, it is the illusion of knowledge.
>
> Daniel J. Boorstin

Sources & Further Reading

- Hunt, E. (2016, December 17). What is fake news? How to spot it and what you can do to stop it. The Guardian. https://www.theguardian.com/media/2016/dec/18/what-is-fake-news-pizzagate

- Kahneman, D. (2011). Illusions of truth. In Thinking, fast and slow (p. 63). Penguin Books.

- Knight, W. (2020, April 2). Fake news 2.0: Personalized, optimized, and even harder to stop. MIT Technology Review. https://www.technologyreview.com/2018/03/27/3116/fake-news-20-personalized-optimized-and-even-harder-to-stop/

- Martinez-Conde, S. (2019, October 5). I heard it before, so it must be true. Scientific American Blog Network. https://blogs.scientificamerican.com/illusion-chasers/i-heard-it-before-so-it-must-be-true/

- Kelly, H. (2023, October 16). How to avoid falling for misinformation, AI images on social media. The Washington Post. https://www.washingtonpost.com/technology/2023/05/22/misinformation-ai-twitter-facebook-guide/

- Kohli, A. (2023, April 29). AI voice cloning is on the rise. Here's what to know. Time. https://time.com/6275794/ai-voice-cloning-scams-music/

- Cybenko, A. K., & Cybenko, G. V. (2018). AI and fake news. IEEE Intelligent Systems, 33, 1-5.